In this week's Canonical Chronicle 84, we discuss:

📱 Why is Reddit everywhere in the SERPs?

✏️ The difference between experience and expert content

🚫 Site reputation abuse penalties reveal how “brand” works in the algorithm

🤖 Is Google using Chrome instead of Googlebot to render JavaScript?

🐢 More niche sites in the AI Overview

📉 Why you won’t get AI Overview reports in Search Console

Subscribe to our newsletter and read past issues

Get the companion slide deck for Canonical Chronicle 84 at https://email.typeamedia.net

Danny Sullivan Interview

This week Aleyda Solis conducted a 30-minute interview with Google Search Liaison Danny Sullivan to understand the future of search. The interview had the usual Google party-line commentary about “just making great content”, but there were a few gems that indicated the direction of travel for the search engine.

In particular:

- why there won’t be AI Overview data in Search Console

- differentiating experience and expertise content

- why there is so much UGC content

Why is there so much UGC content in the SERPs?

Sullivan mentioned that Google has noticed people are more comfortable with UGC because they feel there is no “ulterior motive” behind it, so they are more likely to engage with it. Well, Danny clearly hasn’t met my neighbour: health enthusiast, content creator and official Herbalife reseller. All jokes aside, we previously reported on Google testing a UGC carousel at the top of the SERPs showing the “most recent” content created about major head terms, merging the social media “For You” page with search.

Based on his comments about UGC, it’s likely that this kind of result will become more common in the SERPs. So as SEOs, it’s going to become important to connect your owned brand profiles across different social networks. The easiest way to do this is with the schema.org sameAs property in the Organization markup on your site’s homepage.
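As a rough illustration of the homepage markup idea, here is a minimal Python sketch that emits a schema.org Organization JSON-LD block whose sameAs property lists a brand’s own social profiles. The function name, brand name and profile URLs are hypothetical placeholders, and the sketch assumes Organization is the right type for your brand; check Google’s structured data documentation before deploying anything like this.

```python
import json


def organization_jsonld(name: str, url: str, profiles: list[str]) -> str:
    """Hypothetical helper: build an Organization JSON-LD <script> tag.

    The sameAs list holds URLs of the brand's own profiles on other
    platforms, which is how the markup ties those profiles to the site.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        # Owned brand profiles on social networks (placeholder URLs below)
        "sameAs": profiles,
    }
    body = json.dumps(data, indent=2)
    # Wrap in the script tag that goes in the homepage <head>
    return f'<script type="application/ld+json">\n{body}\n</script>'


if __name__ == "__main__":
    print(organization_jsonld(
        "Example Brand",
        "https://www.example.com/",
        [
            "https://www.tiktok.com/@examplebrand",
            "https://www.instagram.com/examplebrand/",
            "https://www.linkedin.com/company/examplebrand/",
        ],
    ))
```

Generating the tag from a single list of profile URLs makes it easy to keep the homepage markup in sync as new social accounts are added.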

This also extends to your content strategy. How do we build experience into the content we are producing for clients, and how do we use rich media on platforms like TikTok and Reels in a way that helps us rank, builds the client’s brand presence and still comes across as authentic?

For some brands it will be easier than others. Whilst we might conclude that “sexy” industries like fashion, travel or leisure would be the easiest to make content for, we actually think that duller industries (like SEO?) will find it easier to produce experience content, because their user journeys have informational queries naturally built in. For example, a person buying SEO services is going to search for and consume lots of educational and news content before typing that transactional query. Compare this to a shoe store, where all you have to play with is reviews and transactional content. That content is very hard to produce to a high quality, and very hard to get any cut-through with, given the sheer volume of it already on the web.

Differentiating Experience and Expertise

Sullivan mentions that he thinks “it’s weird” to have a blog post about an informational query like ‘how to change your oil’ and then have a lead magnet or CTA at the end to sell you a “car tune up”. We would tend to agree that cross selling services on unrelated content is rarely effective.

Our professional advice is to think about the intent of your content: conversational, informational or transactional. Conversational content is not going to convert anyone. It’s top of funnel, very generic and high volume. We recommend using this content to drive awareness and create a big pool of clicks to power your remarketing campaigns. Informational content is also not going to convert anyone, at least not to a sale. We want to push informational content consumers into an owned channel like email subscriptions or social followers. Then we have transactional content. Here we want to sell, baby, sell.

Why there won’t be an AI Overview tab in GSC

Sullivan said there are no plans to split out AI Overview traffic in Google Search Console, citing that Google doesn’t do it for featured snippets either and that it would be too hard to build because of the technical debt it would create.

We need to call BULL on that statement. If every rank-tracking tool could deploy AI Overview tracking within a week of the feature going live, Google can certainly do it too.

The real reason there won’t be any official Google reporting for AI Overviews is that, like featured snippets, they create a zero-click experience for the owner of the content that Google is ripping off. Telling webmasters that Google is not only taking their content to power AI Overviews but also cutting the traffic they used to receive is bad for business. Google need to keep AI Overviews live long enough for mass adoption, but not so long that we all wise up and realise that we are getting screwed.

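Now, if you don’t want your content to be used in AI Overviews, you can opt out. However, the controls that do this (such as the nosnippet directive) also strip your snippets from regular results, and the only complete opt-out is leaving Google Search altogether. Kind of like a robber saying you can opt out of being robbed by not owning anything. Nice!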

Site reputation abuse update kicks big names out of the SERPs

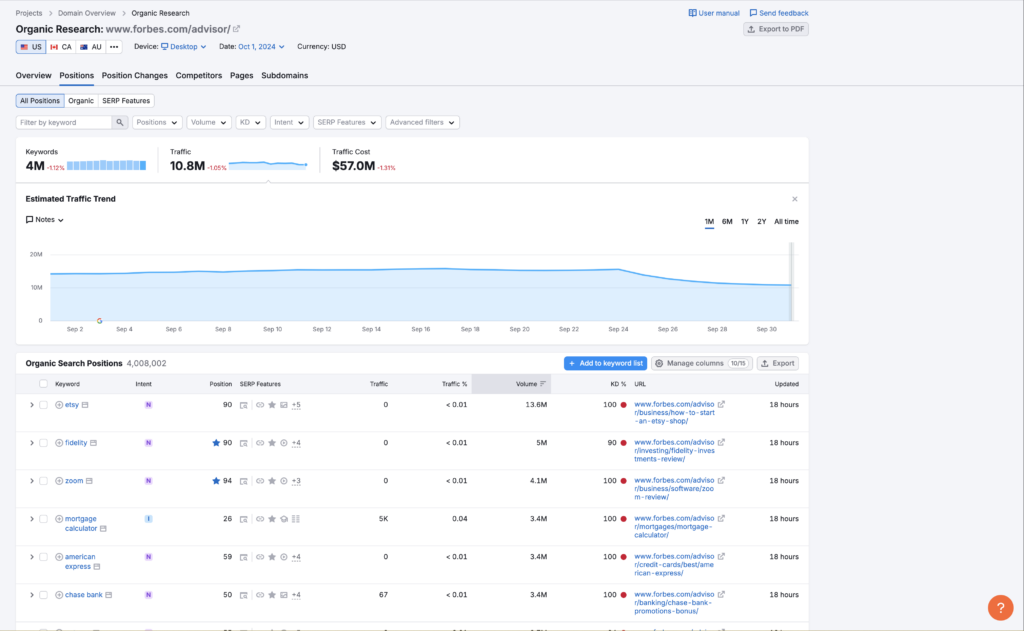

Last week we reported that Google updated a line in their spam documentation about site reputation abuse. Then this week we saw massive decreases in traffic across sites like Forbes Advisor, Reddit, Quora and LinkedIn.

Whilst I’m sure we would all like to have a moment of schadenfreude at these sites’ expense, it’s more interesting to dig into what Google have actually done to them to better understand the algorithm.

The most interesting thing we discovered related to LinkedIn Pulse. For those that don’t know, LinkedIn Pulse is the blogging platform that lives at linkedin.com/pulse/. Prior to this update it ranked for lots of YMYL terms like the medication “semaglutide”, and it was used very successfully for parasite SEO, ranking for ridiculous things like “private investigator near me”.

These rankings have now been wiped out, but that’s not the interesting bit. Pulse also no longer ranks for the brand name.

Before the update, when you searched for “linked in”, LinkedIn Pulse would show in the top 5 of the SERP. It’s now not in the top 100. This suggests that the subfolder has had all the brand power associated with the domain completely removed, much like what happens when a blog sits on a subdomain rather than a subfolder. It’s likely the same thing happened to Forbes Advisor, with the /advisor/ subfolder now simply being treated the way a subdomain is treated.

Is Chrome just Googlebot_javascript?

Cindy Krum of Mobile Moxie released a 40-minute presentation about how Google collect information from various assets, including Chrome, to influence search results.

The part causing the most discussion online is the assertion that Chrome is being used to render JavaScript. So instead of Googlebot rendering JavaScript on Google’s servers, Google would be using individual users’ computers to render it and then storing the result. Effectively, Google would be outsourcing the compute power needed to build its JavaScript index to users’ own machines.

It would certainly explain why Chrome is such a bandwidth and RAM hog, but it’s a very big leap to assert that Google has turned Chrome into a secret crypto miner for JavaScript, and that we are all unknowingly, and without consent, helping Google build their index with our own machines and electricity.

The presentation is well worth a watch; you can find it here.